Low-latency static sites with Scaleway and Cloudflare

For a while, I had been searching for a cheap but reliable hosting solution for this website.

Hosting with GitHub Pages and similar services is an option with a minimal barrier to entry, but I like to be in control of my servers so that I can occasionally use them for tasks other than hosting. For instance, the machine serving this page both runs a Tor relay and acts as a backup for my large but non-sensitive files.

Now, I think I've found a good solution: a €2.99/mo Scaleway plan coupled with Cloudflare for fast page load times worldwide.

Setting up the Server

Scaleway's lowest-tier C1 plan offers 4 bare-metal ARMv7 cores, 2 GB of RAM, a 50 GB SSD and unmetered 200 Mbit/s bandwidth for €2.99/mo. (There are x86 plans too, but ARM is cool.) Extra SSD storage is available at €1/mo per 50 GB. That's a pretty sweet deal, with the downside that their only datacenters are in Paris and Amsterdam, which adds at least 100 ms of latency for users in North America compared to more traditional hosting locations like New York or Montreal.

That's where Cloudflare comes in.

If you're hosting a site, chances are you're already using Cloudflare or have heard of it. In short, it acts as a proxy in front of your site: requests to your domain are routed through Cloudflare before reaching your server. This lets Cloudflare filter traffic and protect you against DDoS attacks, but at first glance, an extra proxy hop would seem only to add latency.

For dynamic websites, this may well be true. However, if you're running a mostly-static site, you can leverage Cloudflare's edge node caching to speed things up tremendously. By default, Cloudflare caches typically-static content like images, CSS and JavaScript, so your server is only hit for your site's HTML markup, while static content is served directly from Cloudflare's edge nodes around the world. It's also free.
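You can see this caching at work in the `cf-cache-status` response header that Cloudflare adds to proxied responses: static assets typically report `HIT` or `MISS`, while HTML under the default settings usually reports `DYNAMIC` (not eligible for caching). The helper below is a minimal sketch; the function name `cache_status` is hypothetical, and `example.com` stands in for your own domain.

```shell
# Hypothetical helper: print the value of Cloudflare's cf-cache-status header
# (e.g. HIT, MISS, EXPIRED, DYNAMIC) from raw HTTP response headers on stdin.
cache_status() {
  # Header names are case-insensitive, so compare in lowercase, and strip
  # the trailing carriage return that HTTP header lines end with.
  awk -F': *' 'tolower($1) == "cf-cache-status" { gsub(/\r/, "", $2); print $2 }'
}

# Usage (requires network access; example.com is a placeholder):
#   curl -sI https://example.com/style.css | cache_status
```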

One upside of running a static website is that you can easily get Cloudflare to cache your HTML too. Most requests then only incur the latency to the user's nearest Cloudflare edge node, so in principle, a user in Australia should see the same fast load times as a user in Canada, despite your server being a cheapo €2.99/mo box in Europe.
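To find out which edge node is serving you, any Cloudflare-proxied site exposes a plain-text diagnostics page at `/cdn-cgi/trace`, made of `key=value` lines; its `colo` field is the code of the data center that answered (usually an airport code such as `AMS`). A minimal sketch of pulling that field out, with the function name `edge_colo` being hypothetical:

```shell
# Hypothetical helper: print the "colo" (edge data center) field from the
# key=value lines of a /cdn-cgi/trace response read on stdin.
edge_colo() {
  # Strip a trailing carriage return in case the response uses CRLF endings.
  awk -F= '$1 == "colo" { sub(/\r$/, "", $2); print $2 }'
}

# Usage (requires network access):
#   curl -s https://www.cloudflare.com/cdn-cgi/trace | edge_colo
```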

Configuring the Site

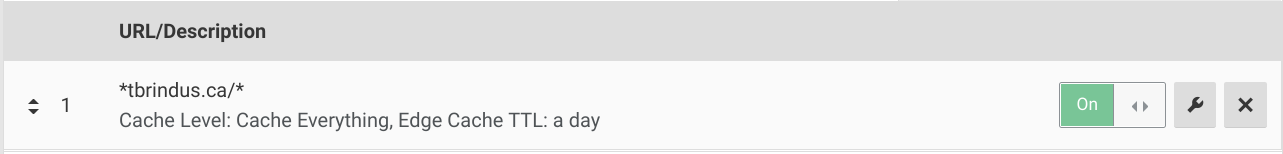

Configuration on Cloudflare's end is easy. Navigate to Page Rules and add a new rule targeting your desired pages. Set Cache Level to Cache Everything to force HTML caching, set Edge Cache TTL to a day (or something similarly long), and you're off!
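If you prefer scripting this over clicking through the dashboard, Page Rules can also be created through Cloudflare's v4 API. The sketch below assumes the `pagerules` endpoint with the `cache_level` and `edge_cache_ttl` action IDs (TTL in seconds); the helper name `page_rule_body` and the `example.com/*` pattern are hypothetical, and Cloudflare has been steering newer setups toward Cache Rules, so check the current API docs before relying on it.

```shell
# Hypothetical helper: build the JSON body for a Page Rule that caches
# everything matching a URL pattern, with an Edge Cache TTL in seconds.
# The pattern is inserted without JSON escaping, so keep it to plain URL characters.
page_rule_body() {
  local pattern="$1" ttl="${2:-86400}"  # default TTL: one day
  printf '{"targets":[{"target":"url","constraint":{"operator":"matches","value":"%s"}}],' "$pattern"
  printf '"actions":[{"id":"cache_level","value":"cache_everything"},{"id":"edge_cache_ttl","value":%d}],' "$ttl"
  printf '"status":"active"}\n'
}

# Usage (zone_id, auth_email and auth_key as in the purge example below):
#   curl -X POST "https://api.cloudflare.com/client/v4/zones/${zone_id}/pagerules" \
#     -H "X-Auth-Email: ${auth_email}" \
#     -H "X-Auth-Key: ${auth_key}" \
#     -H "Content-Type: application/json" \
#     --data "$(page_rule_body 'example.com/*')"
```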

That leaves making your site play nicely with such aggressive caching. In particular, you probably don't want changes to your site to take an entire day to reach your users. The fix is to call Cloudflare's cache purge API whenever your site is rebuilt. You can find your Global API Key at the bottom of your profile page and your site's zone ID on its main overview page, after which purging Cloudflare's cache is just a simple cURL away:

```shell
# Purge every cached file in the zone; set zone_id, auth_email and auth_key first.
curl -X POST "https://api.cloudflare.com/client/v4/zones/${zone_id}/purge_cache" \
  -H "X-Auth-Email: ${auth_email}" \
  -H "X-Auth-Key: ${auth_key}" \
  -H "Content-Type: application/json" \
  --data '{"purge_everything":true}'
```

Since it's a single HTTP request, this is easy to hook into the build or deploy step of most static site generators.
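As one way to wire this in, here is a minimal deploy hook sketch: run your build command, then purge, and fail loudly if the API does not report success. The function names `purge_everything`, `api_succeeded` and `deploy` are hypothetical, and it assumes the API's JSON response carries a `"success"` field, as Cloudflare's v4 responses do. Without `--fail`, curl exits successfully even on an HTTP error, which is why the response body is checked.

```shell
# Hypothetical deploy helpers; zone_id, auth_email and auth_key must be set
# in the environment, as in the curl example above.
purge_everything() {
  curl -sS -X POST "https://api.cloudflare.com/client/v4/zones/${zone_id}/purge_cache" \
    -H "X-Auth-Email: ${auth_email}" \
    -H "X-Auth-Key: ${auth_key}" \
    -H "Content-Type: application/json" \
    --data '{"purge_everything":true}'
}

# Succeed only if the JSON response on stdin reports "success": true.
api_succeeded() {
  grep -q '"success": *true'
}

# Usage: deploy <build command...>, e.g. deploy ./build.sh (hypothetical script)
deploy() {
  "$@" || return 1                      # stop if the build fails
  local response
  response="$(purge_everything)" || return 1
  printf '%s\n' "$response" | api_succeeded || {
    echo "cache purge failed: $response" >&2
    return 1
  }
}
```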

You could build on this to purge only the pages that changed, for example by running a filesystem watcher; for my purposes, purging everything was acceptable.
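For the selective variant, the same `purge_cache` endpoint accepts a `files` array of full URLs instead of `purge_everything`. A minimal sketch of building that body from a list of changed URLs (the helper name `purge_files_body` is hypothetical; URLs are not JSON-escaped, and the API caps how many URLs one request may list, so large batches would need splitting; check Cloudflare's docs for the current limit):

```shell
# Hypothetical helper: build a purge_cache request body that purges only
# the URLs given as arguments, e.g. pages a filesystem watcher saw change.
purge_files_body() {
  local sep='' url
  printf '{"files":['
  for url in "$@"; do
    printf '%s"%s"' "$sep" "$url"   # comma-separate every URL after the first
    sep=','
  done
  printf ']}\n'
}

# Usage: same curl call as above, with a different --data body:
#   --data "$(purge_files_body https://example.com/index.html)"
```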

Wrapping Up

That's all I have to say on this subject; hopefully you found it interesting :)

It's not revolutionary by any means, but I know that I and some of my colleagues were surprised at just how effective this approach was at lowering page load times while also saving on hosting bills.

Till next time!